Identity: The Operating System of AI Security

Securing the AI Enterprise: An Identity-First Approach Blog Series

AI agents are transforming how enterprises operate, but without proper governance they are also creating unprecedented security risks. In this five-part series, Saviynt CPO Vibhuti Sinha lays out a complete framework for strong identity-driven AI governance. We'll start by examining why identity must become the operating system of AI security, then dive into Posture Management for discovering and monitoring AI agents, Identity Lifecycle Management from registration to retirement, Access Management for runtime control, and Audit & Provenance for building trust through evidence. Each post builds on the last, creating a comprehensive approach to making AI not just powerful, but governable.

Post 1 - Identity: The Operating System of AI Security

Author: Vibhuti Sinha, CPO

Trust Is the New Barrier to AI Adoption

Over the past year, I've facilitated dozens of workshops with CISOs, architects, and security leaders, and I keep seeing the same paradox: excitement about AI's transformative potential, matched only by anxiety about trust. These aren't technologists asking whether AI can revolutionize their operations; they already know it can. The questions that keep surfacing are far more urgent: Can we trust AI to behave responsibly? How will it be governed, audited, and secured?

The answer I keep returning to is identity. Identity, already serving as the control plane for human users and machine accounts, must evolve to become the control plane for AI agents, models, and orchestration systems. Without this foundation, scale doesn't mean progress. It means chaos.

AI: Not One Identity, but Many

When enterprises adopted cloud services a decade ago, each new application created a new identity category, leading to sprawling complexity. AI, however, doesn't just add to this complexity—it multiplies it. Modern AI ecosystems introduce entirely new identity classes and entities, each with distinct characteristics:

- AI Agents act as copilots, workflow bots, and reasoning engines that make autonomous decisions.

- MCP (Model Context Protocol) Servers expose tools, data resources, and prompts to agents through a standard protocol, acting as the connective layer between agents and the enterprise systems they reach.

- Model Endpoints serve as APIs delivering LLMs with specific versioning and context requirements.

- Agent Frameworks and Registries—platforms like LangChain, AutoGen, and CrewAI—define agent behavior and capabilities at scale.

The new agentic identity types carry unique access patterns, lifecycle needs, and attack surfaces. They authenticate to systems, consume sensitive data, initiate actions across your infrastructure, and often interact autonomously without human oversight.

Gartner predicts that by 2027, 50% of enterprise business decisions will be augmented or automated by AI agents.

This isn't science fiction; it is already materializing in forward-thinking enterprises. Without identity-first governance, risk compounds with each newly deployed agent.

The Explosive Risk Surface: AI's Unique Blast Radius

When a developer's credentials are compromised, you rotate keys, revoke access, and change the locks. The blast radius, while serious, is contained. But when an AI identity is compromised, the stakes escalate dramatically. Consider the cascading effects:

- Privilege chaining allows a single hijacked AI identity to propagate access across interconnected systems in seconds, not hours.

- Prompt injection attacks enable malicious prompts embedded in one workflow to ripple into critical downstream processes, potentially affecting everything from customer service to financial transactions.

- Shadow AI agents—rogue or forgotten instances quietly operating in your environment—can access sensitive corporate data without anyone noticing until it's too late.

What's the antidote to this nightmare scenario? Identity governance: comprehensive registration, rigorous lifecycle enforcement, and runtime policy controls. Without these foundational elements, AI becomes a fault line running through your security architecture.

Identity as the AI Operating System

We've largely accepted the principle that "identity is the perimeter" in modern security architectures. For AI, identity must become something even more fundamental—the governance fabric itself:

- Every AI agent, MCP server, and tool must be registered in your identity system: no exceptions, no bypassing

- Every entitlement and tool must be governed—not just API keys, but the actions those keys enable

- Every decision must be auditable and provable, with traceable prompts, policies, and outputs

Identity isn't just another security layer to bolt onto AI systems. It's the Operating System of AI security—a centralized nervous system that underpins all governance pillars and ensures accountability at every level.

The Five Pillars of Identity-Driven AI Governance

Through countless workshops and real-world implementations, five pillars have consistently emerged as the essential path to securely governing AI identities:

1. Posture Management (You cannot protect what you don't see)

Start with comprehensive discovery—you need to know every AI identity operating in your environment, whether sanctioned or shadow. Establish visibility by mapping actual resource access across your infrastructure. Implement timeline-based tracking to maintain historical records of who accessed what, and when.

Consider this risk moment: a customer support bot suddenly begins querying HR databases. Posture management flags that deviation instantly as a potential breach; without it, the anomaly might go undetected for weeks.
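To make the detection step concrete, here is a minimal Python sketch of a posture check, assuming a simple in-memory model: a set of registered agent IDs, a learned baseline of the resources each agent normally touches, and an append-only access timeline. `PostureMonitor`, `AccessEvent`, and all agent and resource names are hypothetical illustrations; a real posture engine would discover agents from cloud, SaaS, and MCP telemetry rather than from hard-coded lists.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AccessEvent:
    agent_id: str
    resource: str
    timestamp: datetime


class PostureMonitor:
    """Hypothetical posture engine: inventory check, access timeline, baseline drift."""

    def __init__(self, registered_agents):
        self.registered = set(registered_agents)  # sanctioned AI identities
        self.baseline = defaultdict(set)          # agent_id -> resources it normally uses
        self.timeline = []                        # historical record of every access

    def learn_baseline(self, agent_id, resources):
        self.baseline[agent_id].update(resources)

    def observe(self, event):
        """Record an access event and return any findings it raises."""
        self.timeline.append(event)
        if event.agent_id not in self.registered:
            return [f"shadow agent {event.agent_id} accessed {event.resource}"]
        if event.resource not in self.baseline[event.agent_id]:
            return [f"{event.agent_id} deviated from baseline: {event.resource}"]
        return []

    def history(self, resource):
        """Answer 'who accessed what, and when' for one resource."""
        return [(e.agent_id, e.timestamp) for e in self.timeline if e.resource == resource]


monitor = PostureMonitor(["support-bot"])
monitor.learn_baseline("support-bot", {"crm.tickets", "kb.articles"})
now = datetime.now(timezone.utc)
print(monitor.observe(AccessEvent("support-bot", "crm.tickets", now)))     # []
print(monitor.observe(AccessEvent("support-bot", "hr.employees", now)))    # ['support-bot deviated from baseline: hr.employees']
print(monitor.observe(AccessEvent("ghost-agent", "finance.ledger", now)))  # ['shadow agent ghost-agent accessed finance.ledger']
```

The same timeline that raises findings also answers historical questions, which is why the sketch keeps every event rather than only the anomalous ones.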

2. Identity Lifecycle Management (Every agent is an identity. Every identity needs governance)

Enroll AI agents and MCP servers through formal registration services, just as you would human employees. Unlike employee onboarding, however, registration can be fully automated and embedded in your agent development pipelines, invisible to the teams building agents. Least-privilege policy assignment is a must: no agent should launch with broader access than it needs. Enforce continuous certifications, access reviews, and offboarding workflows that mirror your processes for human users but run predominantly as automated, invisible, agent-driven workflows.

Without Identity Lifecycle Management (ILM), an uncertified finance bot could receive a capability upgrade while retaining its privileged access to sensitive financial systems, and with no lifecycle controls to catch it, that dangerous combination persists indefinitely.
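The finance-bot scenario can be sketched in Python as a lifecycle rule: registration grants only approved scopes, and any capability upgrade voids the existing certification and strips privileged scopes until a fresh access review. This is a minimal illustration under assumed names; `LifecycleService`, `AgentIdentity`, the scope strings, and the 90-day certification window are all hypothetical, not a specific product's behavior.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional

# Hypothetical scope names; real entitlements would come from your IGA platform.
PRIVILEGED_SCOPES = {"finance.payments.write", "finance.ledger.write"}


@dataclass
class AgentIdentity:
    agent_id: str
    owner: str                        # accountable human owner
    version: str
    scopes: set = field(default_factory=set)
    certified_until: Optional[date] = None
    active: bool = True


class LifecycleService:
    """Sketch of agent registration, certification, upgrade, and offboarding."""

    def __init__(self):
        self.registry = {}

    def register(self, agent_id, owner, version, requested, approved):
        # Least privilege: grant only scopes that were both requested and approved.
        identity = AgentIdentity(agent_id, owner, version, set(requested) & set(approved))
        self.registry[agent_id] = identity
        return identity

    def certify(self, agent_id, today, days=90):
        self.registry[agent_id].certified_until = today + timedelta(days=days)

    def upgrade(self, agent_id, new_version):
        # A capability change voids the prior certification and strips privileged
        # scopes until an access review re-approves them.
        identity = self.registry[agent_id]
        identity.version = new_version
        identity.certified_until = None
        identity.scopes -= PRIVILEGED_SCOPES
        return identity

    def offboard(self, agent_id):
        identity = self.registry[agent_id]
        identity.active = False
        identity.scopes.clear()


svc = LifecycleService()
bot = svc.register(
    "finance-bot", owner="jane.doe", version="1.0",
    requested={"finance.ledger.read", "finance.payments.write", "hr.read"},
    approved={"finance.ledger.read", "finance.payments.write"},
)
svc.certify("finance-bot", date(2025, 1, 1))
svc.upgrade("finance-bot", "2.0")
print(sorted(bot.scopes), bot.certified_until)  # ['finance.ledger.read'] None
```

Tying privilege removal to the upgrade event, rather than to a periodic review, is what closes the window in which the upgraded bot would otherwise keep its old access.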

3. Access Management

Shadow AI risks spiral unchecked without proper gatekeeper controls in place. Deploy runtime access gateways that verify and validate each AI request against current policy. Enable scoped delegation tokens for agent-to-agent workflows, ensuring that access is contextual and time-bound.
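Scoped, time-bound delegation can be illustrated with a small Python sketch. It uses a toy HMAC-signed token format built only from the standard library, not a production design; real deployments would more likely use signed JWTs and a standard such as OAuth 2.0 Token Exchange (RFC 8693). The claim names (`sub`, `delegator`, `scope`, `exp`), the demo key, and the helper functions are assumptions for illustration. The property it demonstrates is that a delegated token can only narrow the parent's scope and can never outlive it.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical demo key; a real deployment would use a managed, rotated signing key.
SIGNING_KEY = b"demo-key-not-for-production"


def _sign(payload: bytes) -> str:
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def issue_token(subject, scopes, ttl_seconds, delegator=None, now=None):
    now = time.time() if now is None else now
    claims = {"sub": subject, "delegator": delegator,
              "scope": sorted(scopes), "exp": now + ttl_seconds}
    payload = json.dumps(claims, sort_keys=True).encode()
    return base64.urlsafe_b64encode(payload).decode() + "." + _sign(payload)


def verify_token(token, now=None):
    now = time.time() if now is None else now
    encoded, signature = token.rsplit(".", 1)
    payload = base64.urlsafe_b64decode(encoded.encode())
    if not hmac.compare_digest(_sign(payload), signature):
        raise PermissionError("invalid signature")
    claims = json.loads(payload)
    if now >= claims["exp"]:
        raise PermissionError("token expired")
    return claims


def delegate(parent_token, to_agent, scopes, ttl_seconds, now=None):
    """Agent-to-agent delegation: a child token may only narrow scope and lifetime."""
    now = time.time() if now is None else now
    parent = verify_token(parent_token, now)
    if not set(scopes) <= set(parent["scope"]):
        raise PermissionError("delegation cannot widen scope")
    ttl = min(ttl_seconds, parent["exp"] - now)  # never outlive the parent
    return issue_token(to_agent, scopes, ttl, delegator=parent["sub"], now=now)


t0 = 1_000_000.0
parent = issue_token("orchestrator", {"repo.read", "tickets.write"}, 600, now=t0)
child = delegate(parent, "summarizer", {"repo.read"}, 3600, now=t0)
claims = verify_token(child, now=t0 + 10)
print(claims["sub"], claims["delegator"], claims["scope"])  # summarizer orchestrator ['repo.read']
```

Even though the summarizer asked for an hour, its token expires with the orchestrator's ten-minute grant, and recording the `delegator` keeps the chain visible for later audit.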

A best-practice example is a development bot that can read source code repositories but cannot push changes to production environments without a human in the loop approving them. Enforcing authorization checks at runtime is an absolute must when designing identity security systems for agents.
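A runtime gateway check for that development bot might look like the following Python sketch. It assumes a hypothetical in-memory policy table and an `approver` callback standing in for whatever human-approval workflow you actually use; the action names and decision strings are illustrative only. Note the default-deny posture: unregistered agents and unlisted actions are refused.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Request:
    agent_id: str
    action: str      # e.g. "repo.read" or "repo.push.prod"
    resource: str


# Hypothetical policy table: actions allowed outright vs. actions needing a human.
POLICY = {
    "dev-bot": {
        "allow": {"repo.read"},
        "needs_approval": {"repo.push.prod"},
    },
}


def authorize(req: Request, approver: Optional[Callable[[Request], bool]] = None) -> str:
    """Runtime check evaluated before every action; anything unmatched is denied."""
    rules = POLICY.get(req.agent_id)
    if rules is None:
        return "deny: unregistered agent"
    if req.action in rules["allow"]:
        return "allow"
    if req.action in rules["needs_approval"]:
        if approver is not None and approver(req):
            return "allow: human approved"
        return "deny: awaiting human approval"
    return "deny: not in policy"


print(authorize(Request("dev-bot", "repo.read", "payments-service")))       # allow
print(authorize(Request("dev-bot", "repo.push.prod", "payments-service")))  # deny: awaiting human approval
print(authorize(Request("dev-bot", "repo.push.prod", "payments-service"),
                approver=lambda r: True))  # allow: human approved
```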

4. Audit & Compliance

Maintain agent activity logs that tie every AI action to a specific run ID, creating a tamper-evident chain of accountability. Generate audit-ready posture reports that definitively show whether AI agents accessed personally identifiable information (PII), and that capture events such as ownership changes, guardrail changes, or any other security configuration change.

A key use case: during compliance reviews, your access timeline becomes a critical audit artifact, proving exactly which agents accessed regulated data and under which authorization policies.
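One common way to make such a log tamper-evident is hash chaining, sketched below in Python under simple assumptions: each entry records a run ID, the agent, the action, the authorization policy, and data-classification tags, and commits to the SHA-256 hash of the previous entry. `AuditLedger` and the field names are hypothetical. Hash chaining only makes edits detectable; to also catch truncation of the newest entries, the latest hash would need to be anchored somewhere the log writer cannot modify.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditEntry:
    run_id: str
    agent_id: str
    action: str
    resource: str
    policy_id: str          # authorization policy that permitted the action
    tags: tuple             # data classifications, e.g. ("pii",)
    prev_hash: str
    entry_hash: str


def _digest(run_id, agent_id, action, resource, policy_id, tags, prev_hash):
    body = json.dumps([run_id, agent_id, action, resource, policy_id, list(tags), prev_hash])
    return hashlib.sha256(body.encode()).hexdigest()


class AuditLedger:
    """Append-only log in which each entry commits to the hash of the previous one."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, run_id, agent_id, action, resource, policy_id, tags=()):
        prev = self.entries[-1].entry_hash if self.entries else self.GENESIS
        digest = _digest(run_id, agent_id, action, resource, policy_id, tags, prev)
        entry = AuditEntry(run_id, agent_id, action, resource, policy_id,
                           tuple(tags), prev, digest)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute the chain; editing or reordering entries breaks it."""
        prev = self.GENESIS
        for e in self.entries:
            expected = _digest(e.run_id, e.agent_id, e.action, e.resource,
                               e.policy_id, e.tags, prev)
            if e.prev_hash != prev or e.entry_hash != expected:
                return False
            prev = e.entry_hash
        return True

    def who_accessed(self, tag):
        """Compliance query: which agents touched tagged data, in which run, under which policy."""
        return [(e.agent_id, e.run_id, e.policy_id) for e in self.entries if tag in e.tags]


ledger = AuditLedger()
ledger.append("run-001", "support-bot", "read", "crm.customers", "pol-7", tags=("pii",))
ledger.append("run-002", "dev-bot", "read", "repo.main", "pol-2")
print(ledger.who_accessed("pii"))  # [('support-bot', 'run-001', 'pol-7')]
print(ledger.verify())             # True
```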

5. Provenance & Accountability (The autonomous behavior of agentic workflows makes this especially hard)

Comprehensive provenance chains, covering both impersonation and delegation scenarios in agentic workflows, are essential for attributing decisions and demonstrating accountability. Retain human accountability alongside explainable AI agent actions; automation shouldn't obscure responsibility. Provide the transparency that regulators and customers increasingly demand, offering full context rather than just outcomes.

Consider loan approvals: when an AI system approves or denies a loan application, you must be able to show the exact policy, the data inputs, and the reasoning of the agent (or group of agents) that led to that decision. Without provenance, you're flying blind into regulatory scrutiny.
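A provenance chain for that loan decision can be modeled as a small directed graph, as in this Python sketch. It assumes every decision, agent, policy, and data input is a node and every "derived from" relationship (decided by, delegated by, evaluated under, informed by) is an edge; `ProvenanceGraph`, the relation names, and the node IDs are hypothetical. Walking the graph backward from the decision recovers the full context and the human ultimately accountable for the delegation chain.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    node_id: str
    kind: str                     # "human", "agent", "policy", "data", or "decision"
    attrs: dict = field(default_factory=dict)


class ProvenanceGraph:
    """Directed graph whose edges point from a node to what it was derived from."""

    def __init__(self):
        self.nodes = {}
        self.derived_from = {}    # node_id -> list of (relation, parent_id)

    def add(self, node_id, kind, **attrs):
        self.nodes[node_id] = Node(node_id, kind, attrs)
        self.derived_from.setdefault(node_id, [])

    def link(self, child, relation, parent):
        self.derived_from[child].append((relation, parent))

    def explain(self, node_id):
        """Walk back from an output and return every (child, relation, parent) edge."""
        trail, seen, stack = [], {node_id}, [node_id]
        while stack:
            current = stack.pop()
            for relation, parent in self.derived_from[current]:
                trail.append((current, relation, parent))
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return trail

    def accountable_humans(self, node_id):
        ancestors = {parent for _, _, parent in self.explain(node_id)}
        return sorted(n for n in ancestors if self.nodes[n].kind == "human")


g = ProvenanceGraph()
g.add("loan-officer", "human")
g.add("underwriting-agent", "agent", version="3.1")
g.add("credit-check-agent", "agent", version="1.4")
g.add("policy-LN-12", "policy")
g.add("bureau-report-889", "data")
g.add("decision-42", "decision", outcome="denied")
g.link("underwriting-agent", "delegated_by", "loan-officer")
g.link("credit-check-agent", "delegated_by", "underwriting-agent")
g.link("decision-42", "decided_by", "underwriting-agent")
g.link("decision-42", "evaluated_under", "policy-LN-12")
g.link("decision-42", "informed_by", "credit-check-agent")
g.link("decision-42", "informed_by", "bureau-report-889")
for edge in g.explain("decision-42"):
    print(edge)
print(g.accountable_humans("decision-42"))  # ['loan-officer']
```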

Weaving Identity into AI Systems – Security Fabrics

Imagine an architectural hub—an Identity Security Fabric—that weaves together all these pillars:

- A Posture Engine continuously discovering and inventorying agents.

- A Lifecycle Service registering and applying policies to each agent throughout its operational lifespan.

- An Access Gateway enforcing runtime checks before any action executes.

- An Audit Ledger recording actions immutably for compliance and forensics.

- A Provenance Graph anchoring every output to its origins.

This fabric integrates with your existing ecosystem to protect every identity: human, non-human, and now AI agents. The missing piece in most architectures today? Identity-first governance designed specifically for AI.

Why This Matters—Not Just Tech, But Strategic

Each of the scenarios listed throughout this post underscores an inescapable truth: identity is where AI security begins and ends.

AI is here, and enterprises stand at a critical inflection point. Those that build identity-first structures—governing posture, lifecycle, access, audit, and provenance—will define competitive advantage for the next decade. Others will find themselves trapped in perpetual compliance catch-up mode, reacting to breaches and regulatory penalties.

Companies that fail to evolve their identity infrastructure stand to lose far more than data. They risk losing customer trust, regulatory favor, and brand integrity—assets that, once damaged, take years to rebuild.

Closing Thought

We've been here before, with cloud adoption, SaaS proliferation, and container orchestration. It took years, and often painful security incidents, for the industry to realize that identity must underpin security architecture. AI is advancing faster than any technology wave before it. We don't have years to figure this out.

Embedding identity at the heart of AI governance isn't optional anymore; it's imperative. The organizations that act now will turn AI from an existential risk into a strategic advantage built on trust. And they'll do it with identity at the core.

Coming Up: In our next post, we'll dive into the first critical pillar: Posture Management. We'll explore how to discover Shadow AI lurking in your environment, establish comprehensive visibility, and build risk scoring that lets you prioritize what matters most.

Thanks for reading!

Related Post

12 / 19 / 2025

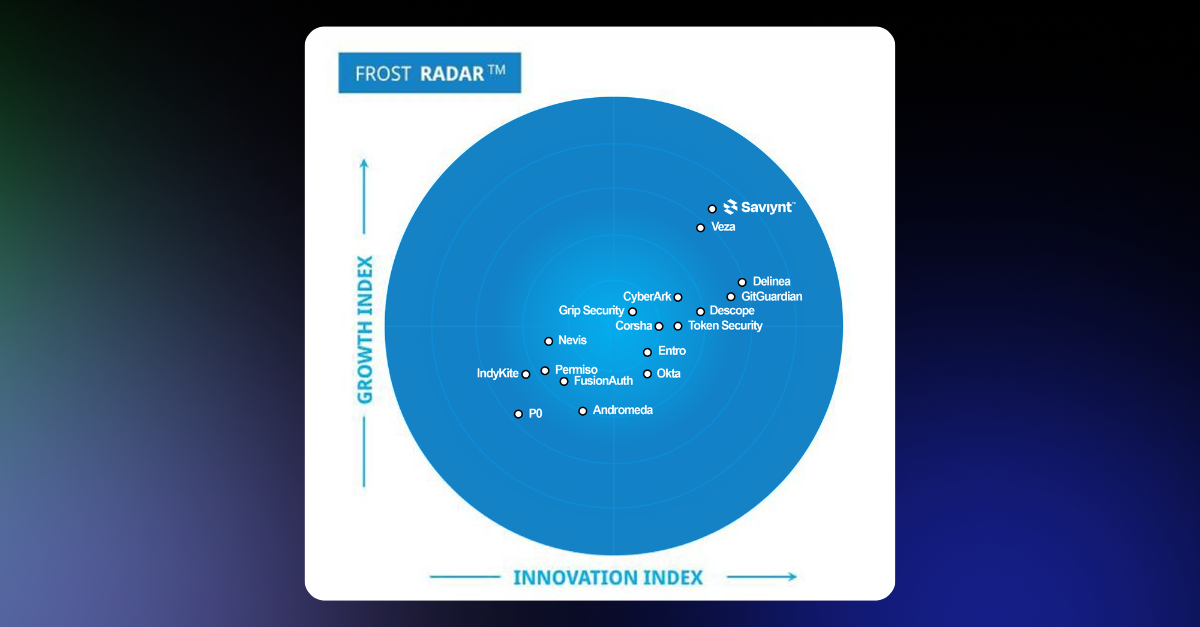

Saviynt Recognized as a Leader in Frost Radar™: Non-Human Identity Solutions, 2025

READ BLOG

Report

Saviynt Named Gartner Voice of the Customer for IGA

EBook

Welcoming the Age of Intelligent Identity Security

Press Release

AWS Signs Strategic Collaboration Agreement With Saviynt to Advance AI-Driven Identity Security

Solution Guide