You Can’t Govern What You Can’t See: Posture Management for AI Agents

In the first post in this series, we established that identity must serve as the operating system of AI security, introducing five essential governance pillars. Now we dive into the foundation that everything else depends on: Posture Management. You cannot secure what you cannot see—and most enterprises have no idea how many AI agents are currently operating in their environments.

Introduction: Visibility Before Control

One of the most striking "aha" moments I've witnessed among CISOs and enterprise architects over the last 12 months is this realization: AI security begins with posture visibility.

It sounds deceptively simple, but history repeatedly validates this truth. When cloud adoption exploded a decade ago, the first challenge wasn't deploying better firewalls—it was establishing visibility. Security teams desperately needed to know which cloud applications existed, which workloads were deployed, and who controlled them. The same pattern repeated itself with SaaS proliferation and container orchestration.

Today, we're watching the same problem resurface—this time amplified by AI. Shadow AI has become ubiquitous across enterprises:

- Employees casually connect copilots to sensitive data repositories without IT approval

- Developers spin up MCP (Model Context Protocol) servers to orchestrate complex workflows

- Business teams adopt plug-and-play agents from frameworks like LangChain and AutoGen

The reality is sobering: 92% of organizations lack full visibility into AI identities, and 95% doubt they could detect misuse if it happened.*

Until organizations discover and continuously monitor their AI identities, any attempt at governance becomes wishful thinking. Posture management serves as the foundational layer that lifecycle governance, access control, audit capabilities, and provenance tracking all depend upon.

The Scope of the Problem: Shadow AI Everywhere

When I ask CISOs "How many AI agents are active in your environment today?", the answers reveal a troubling inconsistency. Some confidently say five. Others estimate fifty. But many simply admit the uncomfortable truth: "We honestly don't know."

This uncertainty mirrors the early days of SaaS adoption. Marketing departments would swipe a corporate credit card for Salesforce or Dropbox long before IT had a chance to evaluate or approve it. Now AI is following the same trajectory, but with significantly higher stakes.

Here are some examples of Shadow AI risks:

- Unregistered copilots connecting directly to Salesforce or Workday without policy controls

- Orphaned agents created for proof-of-concept experiments, never formally decommissioned

- MCP servers exposing critical integration points without enforcement mechanisms

- API keys stored in development scripts connecting agents to sensitive enterprise systems

That's not just a blind spot in your security program—it's an open attack surface waiting to be exploited.

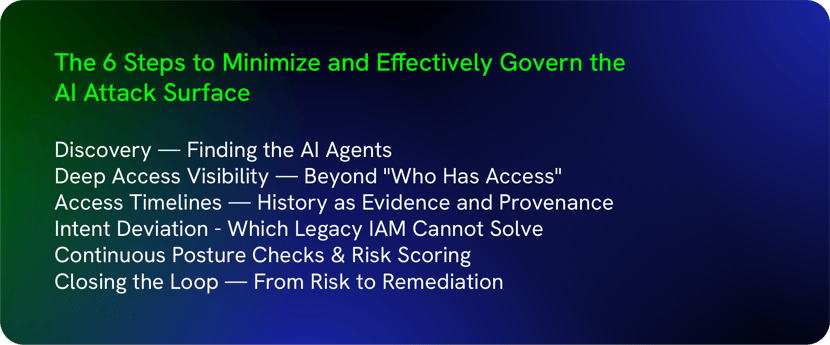

Minimizing and governing this attack surface starts with a systematic approach, because AI agents behave fundamentally differently from traditional human and non-human identities. The following six core steps give organizations a way to shrink and effectively govern it.

Step 1: Discovery — Finding the AI Agents

The first step in effective posture management is discovery. This process proves far more complex than traditional IAM discovery because AI agents don't behave like human users or conventional service accounts. There are several techniques to identify agents, including:

- Declared Discovery

  - Platform discovery that leads to agent registration and metadata ingestion across pro-code, low-code, and no-code platforms, including LangChain, CrewAI, AWS Bedrock, AWS Agentcore, GCP Vertex AI, Microsoft Foundry, Salesforce Agentforce, ServiceNow, and many more. This only finds declared or known agents, not shadow agents.

  - MCP Registry Integration, which leverages the fact that MCP servers often act as brokers. Integrating with them provides a partial inventory of registered agents.

- Behavioral Discovery

  - Network Traffic Analysis, which scans outbound traffic for connections to known AI providers like OpenAI, Anthropic, Google Gemini, and AWS Bedrock. This finds agent activity, not the agents themselves. It also does not identify intent, ownership, or lifecycle, and it requires identity correlation.

  - Runtime Behavioral Profiling, which detects non-deterministic execution loops, tool-calling chains, and privilege escalation patterns that can reveal hidden agents.

- Identity-Based Discovery

  - Identity Analytics that search for entitlements linked to “non-human” identities within existing IAM and IGA systems.

  - Plugin & App Store Monitoring, which catches instances where teams add copilots or connectors without IT approval.

- Code and Pipeline Discovery: Scan code pipelines, source code repositories, and similar sources to find dormant and pre-production agents.

- Delegation and Chain Discovery: Agents often run on behalf of humans, other agents, or applications, so understanding token exchanges and on-behalf-of (OBO) or impersonation flows is crucial to discovering otherwise invisible tokens.

Here’s a case in point: During one workshop session, a Fortune 500 company discovered more than 30 unsanctioned copilots actively querying HR and finance data. None of these agents appeared in IAM systems because they authenticated using shared API keys that bypassed traditional identity controls.
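To make the network traffic analysis technique from the list above more concrete, here is a minimal Python sketch. It assumes you already have outbound flow records as (source, destination host) pairs, for example from proxy or DNS logs. The host list is illustrative and incomplete, and the function names are hypothetical. As noted above, this surfaces agent activity only; tying a source address back to an owner still requires identity correlation.

```python
from collections import defaultdict
from typing import Optional

# Illustrative, non-exhaustive map of AI provider API hosts (an assumption;
# maintain your own list from vendor documentation).
AI_PROVIDER_HOSTS = {
    "api.openai.com": "OpenAI",
    "api.anthropic.com": "Anthropic",
    "generativelanguage.googleapis.com": "Google Gemini",
}
# Bedrock runtime endpoints are regional, e.g. bedrock-runtime.us-east-1.amazonaws.com
BEDROCK_PREFIX = "bedrock-runtime."
BEDROCK_SUFFIX = ".amazonaws.com"


def classify_host(host: str) -> Optional[str]:
    """Return the provider name for a destination host, or None if unknown."""
    host = host.lower().rstrip(".")
    if host in AI_PROVIDER_HOSTS:
        return AI_PROVIDER_HOSTS[host]
    if host.startswith(BEDROCK_PREFIX) and host.endswith(BEDROCK_SUFFIX):
        return "AWS Bedrock"
    return None


def find_ai_traffic(flows):
    """Group AI provider connections by source. flows: iterable of (source, dest_host)."""
    hits = defaultdict(set)
    for source, dest in flows:
        provider = classify_host(dest)
        if provider:
            hits[source].add(provider)
    return dict(hits)


# Hypothetical flow records.
flows = [
    ("10.0.0.5", "api.openai.com"),
    ("10.0.0.5", "example.com"),
    ("10.0.0.9", "bedrock-runtime.us-east-1.amazonaws.com"),
]
ai_sources = find_ai_traffic(flows)
```

Each key in `ai_sources` is a candidate for follow-up: a workload or workstation talking to an AI provider that may or may not map to a registered agent.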

Step 2: Deep Access Visibility — Beyond "Who Has Access"

Traditional identity visibility stops at the perimeter: which systems does this identity have access to? For AI governance, that surface-level view is dangerously incomplete. The more critical question becomes: "What can they actually do with that access?" Enterprises need granular visibility into what AI agents can do inside each system: the specific actions, data queries, and operations each agent is authorized to perform. AI governance cannot be treated as traditional governance simply extended to AI agents.

Consider a customer support copilot as an example. It might have permission to read ticketing data (relatively low risk), update customer profiles (medium risk), or issue refunds (high risk).

This distinction is critical for accurate risk assessment. If you only map access at the system level, you completely miss the action-level privileges that determine actual risk. There are several technical best practices to help organizations gain the access visibility needed to identify AI access to critical systems, including:

- Ingest logs into an identity security data lake that aggregates activity across your environment.

- Build comprehensive access maps that link AI identities to specific actions and data stores.

- Enrich this data with contextual metadata including agent owner, model version, and hosting platform details.

- Most critically, continuously detect intent deviation—the moment an agent’s behavior diverges from its declared or authorized purpose, revealing the true risk inherent in agentic systems.

Together, these practices let enterprises see not just the connections between agents and systems, but the full spectrum of capabilities each agent possesses, as well as when agents deviate from their original goals and objectives.
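As a rough illustration of an action-level access map, the sketch below models the customer support copilot from earlier in this step. The `Entitlement` type, the system and action names, and the three-tier risk scale are all assumptions made for the example, not a prescribed schema.

```python
from dataclasses import dataclass

# Assumed three-tier ordering, used only to compare risk labels.
RISK_ORDER = {"low": 1, "medium": 2, "high": 3}


@dataclass(frozen=True)
class Entitlement:
    system: str
    action: str
    risk: str  # "low", "medium", or "high"


def highest_risk(entitlements):
    """Return the highest risk tier among an agent's entitlements, or None if empty."""
    if not entitlements:
        return None
    return max((e.risk for e in entitlements), key=RISK_ORDER.__getitem__)


# Hypothetical access map for the support copilot.
support_copilot = [
    Entitlement("ticketing", "read_tickets", "low"),
    Entitlement("crm", "update_customer_profile", "medium"),
    Entitlement("payments", "issue_refund", "high"),
]

print(highest_risk(support_copilot))  # high
```

A system-level view would list only "ticketing, crm, payments" for this agent. Recording the action alongside the system is what exposes the refund capability as the entitlement that drives its risk.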

Step 3: Access Timelines — History as Evidence

Unlike human users who maintain relatively stable access patterns, AI agents evolve rapidly.

They gain new capabilities through updates, connect to additional systems as requirements expand, and fundamentally mutate when underlying models are upgraded or replaced.

This dynamic nature makes access timelines absolutely crucial for provenance and governance.

An effective access timeline should capture:

- When the agent was initially registered in your environment

- A chronological record of access assignments and modifications highlighting intent deviation, if any

- Historical activity including queries executed, API calls made, and outputs generated

- Changes in scope, entitlements, or model versions over time

Having this data at one’s fingertips via a timeline helps organizations maintain audit readiness while also reducing attack surface exposure. So when an auditor asks you to "prove that no AI agent accessed Social Security Numbers during the last quarter," you are able to provide a complete and detailed report supporting your position.

Without timelines, you can only show a static point-in-time snapshot of current permissions. With comprehensive timelines, you demonstrate the entire history of agent behavior, definitively proving or disproving access to sensitive data.
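Here is one way the auditor question above could be answered from a timeline, sketched in Python. The `TimelineEvent` fields, the data classification labels, and the sample dates are hypothetical. The sketch also assumes every agent action is logged with the data classifications it touched; the answer is only as complete as that logging.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class TimelineEvent:
    agent_id: str
    timestamp: datetime
    event_type: str  # e.g. "registered", "access_granted", "query"
    data_classes: frozenset = frozenset()  # classifications touched, e.g. {"ssn"}


def events_touching(events, data_class, start, end):
    """Return events in [start, end) that touched the given data classification."""
    return [
        e for e in events
        if start <= e.timestamp < end and data_class in e.data_classes
    ]


# Hypothetical timeline for one agent.
timeline = [
    TimelineEvent("agent-42", datetime(2025, 1, 10), "registered"),
    TimelineEvent("agent-42", datetime(2025, 2, 3), "query", frozenset({"pii"})),
    TimelineEvent("agent-42", datetime(2025, 5, 20), "query", frozenset({"ssn"})),
]

# Did any recorded event touch SSNs during Q1 2025?
q1_hits = events_touching(timeline, "ssn", datetime(2025, 1, 1), datetime(2025, 4, 1))
print(len(q1_hits))  # 0
```

An empty result for the quarter, backed by the underlying event records, is the kind of evidence a point-in-time permissions snapshot cannot provide.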

Timelines aren't just operationally useful; they’re compliance gold during regulatory examinations.

Step 4: Continuous Intent Deviation Analysis

AI Posture Management cannot stop at configuration checks, identity inventory, or access entitlements alone because agentic systems are autonomous, probabilistic, and goal-seeking by design. An AI agent may be perfectly configured, correctly authenticated, and technically authorized, yet still introduce risk when its runtime behavior diverges from its original or approved intent.

This is where intent deviation analysis becomes essential. By continuously comparing what an agent was meant to do versus what it is actually doing across identity chains, tools, data access, and actions, posture management shifts from static assurance to behavioral risk detection. Intent deviation reveals risks that traditional posture signals cannot see, such as scope creep, emergent behavior, misaligned delegation, or goal drift introduced by model updates, prompt changes, or downstream agent interactions.

Without intent deviation, AI posture management only validates readiness at rest; with it, organizations gain continuous assurance in motion, which is the only way to effectively manage risk in autonomous, non-deterministic AI systems.
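The simplest possible form of this comparison is an allowlist check: declare the (system, action) pairs an agent is meant to use, then flag anything observed outside that set. The Python sketch below does exactly that. It is a deliberately reduced stand-in, since real intent deviation analysis would also need to reason about goals, delegation chains, and context, and the `DeclaredIntent` type and sample names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DeclaredIntent:
    agent_id: str
    allowed: set = field(default_factory=set)  # {(system, action), ...}


def intent_deviations(intent, observed):
    """Return observed (system, action) pairs outside the declared intent,
    de-duplicated and in first-seen order."""
    seen = set()
    deviations = []
    for pair in observed:
        if pair not in intent.allowed and pair not in seen:
            seen.add(pair)
            deviations.append(pair)
    return deviations


# Hypothetical declared intent and runtime observations.
intent = DeclaredIntent("dev-agent", {("jira", "read"), ("confluence", "read")})
observed = [
    ("jira", "read"),
    ("sap_hr", "read"),
    ("jira", "read"),
    ("sap_hr", "read"),
]

print(intent_deviations(intent, observed))  # [('sap_hr', 'read')]
```

Note that the agent in this example could be fully authenticated and even technically authorized for the SAP HR read. The deviation comes from comparing behavior against declared purpose, not against entitlements.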

Step 5: Continuous Posture Checks & Risk Scoring

Discovery and timelines establish your foundation, but they're insufficient alone. Enterprises need continuous posture monitoring that adapts to changing conditions.

There are several risk signals organizations should be aware of, including:

- Entitlement creep: AI agents retaining unused access long after their initial purpose expires

- Behavioral anomalies: Sudden spikes in data requests or queries outside normal patterns

- Intent deviations: As identified in Step 4

- Shadow integrations: Unapproved API calls to systems not in the original scope

- Unauthorized model upgrades: AI agents upgraded to different LLMs or model versions outside governance processes

All risk signals should feed into robust scoring models that function similarly to user risk scoring models, but calibrated for AI-specific behaviors.

Here’s an example: A developer agent typically queries Jira for tickets and Confluence for documentation. Suddenly, it requests access to SAP HR data—a system completely outside its normal operating parameters. The risk score spikes immediately and an automated response suspends the agent or challenges the request, requiring human approval before proceeding.
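The developer agent scenario can be sketched as a weighted signal score with a suspension threshold. The weights, the threshold, and the signal names below are invented for illustration. A production scoring model would be calibrated against observed agent behavior rather than hard-coded.

```python
# Hypothetical weights per risk signal (assumptions, not recommended values).
SIGNAL_WEIGHTS = {
    "entitlement_creep": 15,
    "behavioral_anomaly": 25,
    "intent_deviation": 30,
    "shadow_integration": 30,
    "unauthorized_model_upgrade": 20,
}
SUSPEND_THRESHOLD = 50  # assumed cut-off for automated response


def risk_score(signals):
    """Sum the weights of distinct signals, capped at 100. Unknown signals score 0."""
    return min(100, sum(SIGNAL_WEIGHTS.get(s, 0) for s in set(signals)))


def respond(score):
    """Map a score to an automated response."""
    if score >= SUSPEND_THRESHOLD:
        return "suspend_pending_approval"
    return "allow"


# The developer agent suddenly requests SAP HR data: outside its declared
# purpose and outside its original system scope.
score = risk_score(["intent_deviation", "shadow_integration"])
print(score, respond(score))  # 60 suspend_pending_approval
```

Routing the high-score case to "suspend pending approval" rather than a silent block mirrors the example above, where a human must approve the request before the agent proceeds.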

Step 6: Closing the Loop — From Risk to Remediation

Too many organizations treat posture assessment like an annual audit: a snapshot taken, findings documented, then filed away until next year. But AI agents don't stay static. They evolve, multiply, and change behavior faster than any human-driven system ever could. A mature posture management program not only tells you continuously where your risks lie, but also helps you prioritize and close them.

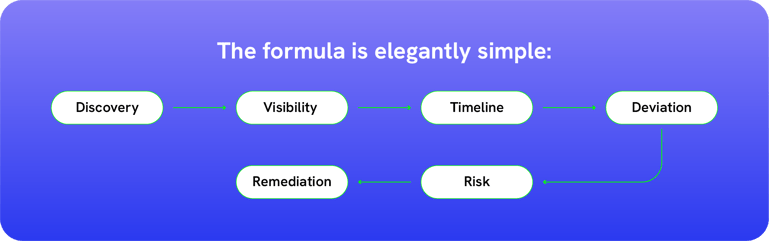

The Posture Management Loop:

- Discover AI agents across your environment

- Map their access to systems and data

- Track activity via comprehensive timelines

- Analyze intent deviations from what agents are supposed to be doing

- Score risk continuously using behavioral analytics

- Remediate by disabling rogue agents, re-certifying legitimate ones, or restricting excessive privileges

This closed-loop approach ensures that posture management remains a living discipline rather than a static snapshot. Each phase feeds into the next, creating a self-reinforcing cycle that gets smarter over time as your behavioral analytics learn what normal looks like—and what doesn't.
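The final remediation step of the loop can be pictured as a small decision function over each agent's posture record. The Python sketch below is one hypothetical policy: the `AgentPosture` fields, the `disable_at` threshold, and the ordering of the checks are assumptions, and a real program would likely add approval workflows around each outcome.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AgentPosture:
    agent_id: str
    owner: Optional[str]      # None means no identifiable owner (orphaned)
    unused_entitlements: int  # entitlements granted but not exercised
    risk_score: int           # 0-100, from continuous scoring


def remediation(posture, disable_at=80):
    """Pick one remediation action for an agent (hypothetical policy)."""
    if posture.owner is None or posture.risk_score >= disable_at:
        return "disable"
    if posture.unused_entitlements > 0:
        return "restrict_privileges"
    return "recertify"


# Hypothetical posture records.
fleet = [
    AgentPosture("poc-bot", None, 3, 40),
    AgentPosture("supply-planner", "ops-team", 5, 35),
    AgentPosture("inventory-sync", "ops-team", 0, 10),
]
actions = {p.agent_id: remediation(p) for p in fleet}
```

Here the orphaned agent is disabled, the over-privileged one is restricted, and the remaining agent goes through re-certification, matching the three remediation options in the loop above.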

Case Study: Global Retailer

A global retailer piloted AI agents to optimize supply chain operations and inventory management. The initial setup registered three agents in the development environment—a controlled, monitored deployment.

Six months later, a comprehensive discovery scan revealed a startling reality: over 40 agents were now operating across production systems, with many completely unregistered in any inventory. The assessment found that:

- 25% had excessive privileges far beyond their operational requirements

- 10% were orphaned with no identifiable owner or business justification

- Two agents remained active despite running on expired API keys that should have been rotated

By systematically remediating these issues, the retailer avoided what auditors explicitly warned could become a material compliance violation under GDPR. The posture management initiative transformed from a security exercise into a business-critical risk mitigation program.

Why CISOs Embrace Posture Management

When I present this framework to CISOs, they consistently respond with recognition and urgency. Why does this resonate so powerfully? Because posture management directly answers the first question board members inevitably ask: "How many AI agents do we even have deployed across the organization?"

Posture visibility gives CISOs the ability to inventory all AI agents with confidence and accuracy, prioritize risks based on actual access and capability, and build a solid foundation for lifecycle management, access control, audit, and provenance.

As one workshop CISO participant told me, "If you give me a dashboard showing all AI agents with their risk scores tomorrow, that becomes my Board slide. That's the conversation starter we desperately need."

Conclusion: Visibility Before Control

Posture management isn't glamorous. It doesn't involve cutting-edge AI models or sophisticated algorithms. But it is absolutely indispensable.

You cannot enforce lifecycle governance without knowing which agents exist. You cannot build effective access gateways without understanding which agents require runtime control. You cannot prove provenance without visibility into historical behavior.

That's why I maintain the position that AI security doesn't start with lifecycle management, access control, or audit capabilities. It starts with posture. You must see it before you can secure it.

Up Next: Discovery and visibility give you the map—but now you need the structure. Our next post explores Identity Lifecycle Management: how to govern AI agents from their first registration through their eventual retirement, ensuring that no agent operates without proper oversight.
